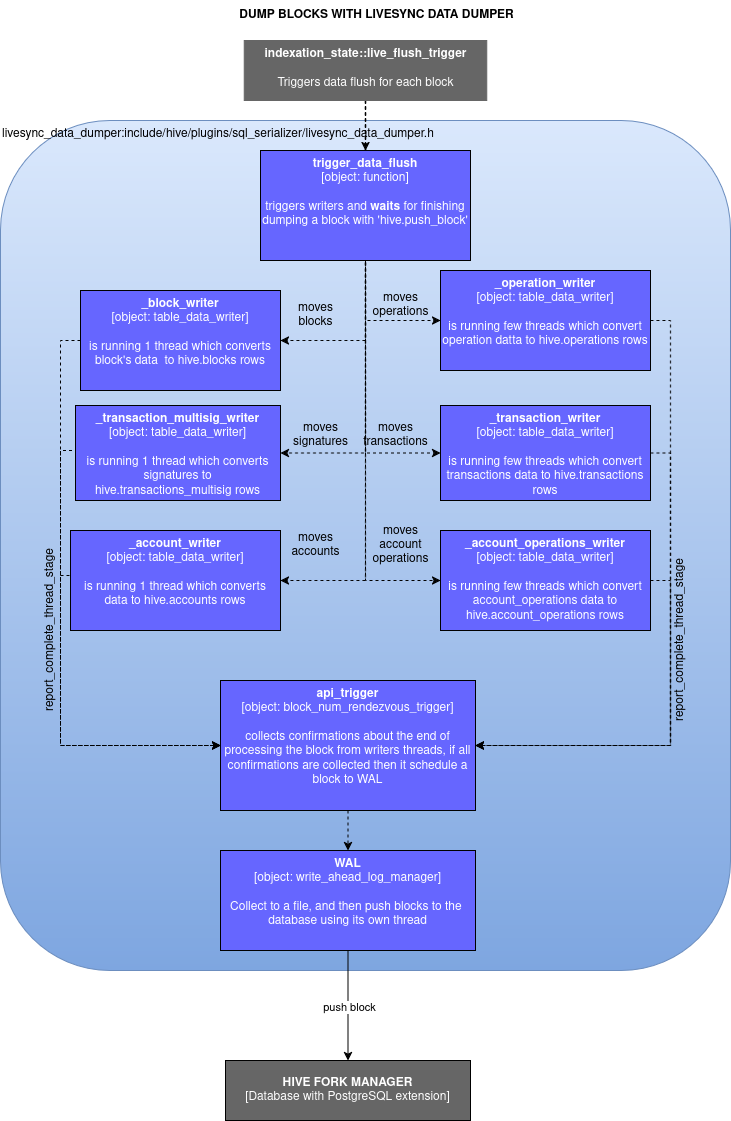

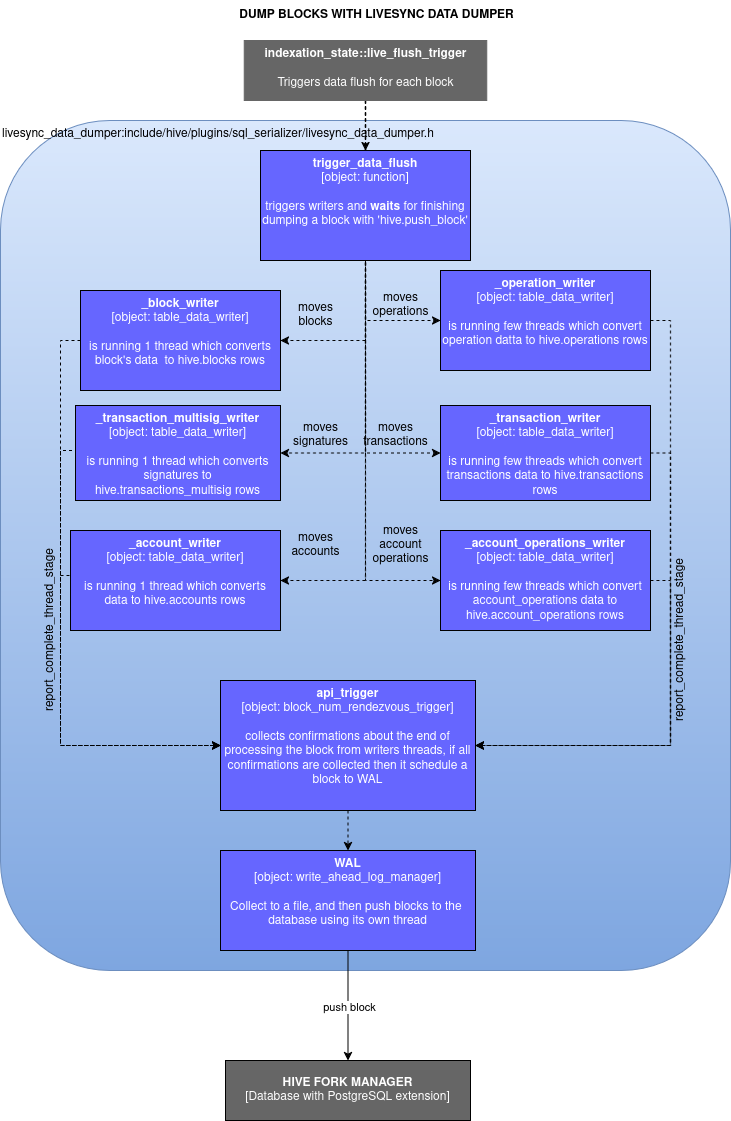

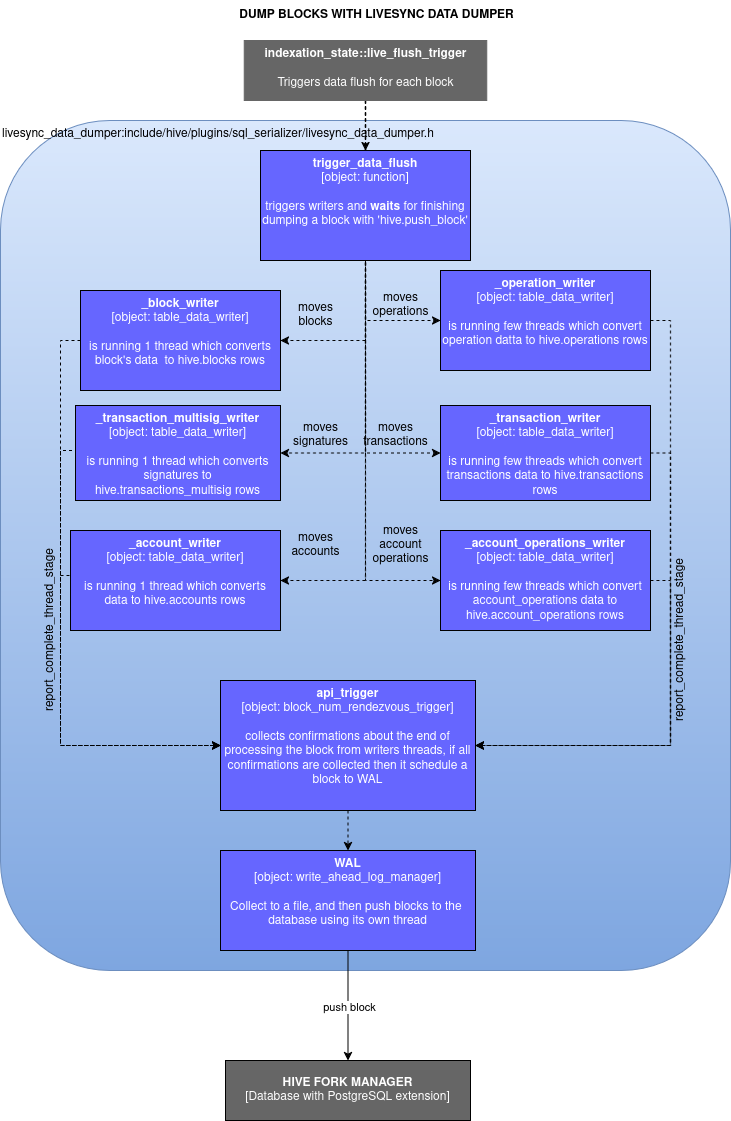

update doc: WAL

Parent: 933662a0

Pipeline #118763 canceled

Stage: build_and_test_phase_1

Stage: build_and_test_phase_2

Stage: cleanup
