Restructure a few sections and starting points

Parent: ff648204

- _config.yml: 7 additions, 7 deletions

- _data/apidefinitions/broadcast_ops.yml: 5 additions, 5 deletions

- _data/apidefinitions/broadcast_ops_communities.yml: 10 additions, 10 deletions

- _data/nav.yml: 12 additions, 12 deletions

- _introduction/web3.md: 23 additions, 0 deletions

- _introduction/welcome.md: 3 additions, 19 deletions

- _introduction/workflow.md: 10 additions, 0 deletions

- _quickstart/authentication.md: 71 additions, 0 deletions

- _quickstart/choose_library.md: 6 additions, 3 deletions

- _quickstart/fetch_broadcast.md: 19 additions, 0 deletions

- _quickstart/hive_full_nodes.md: 115 additions, 54 deletions

- _quickstart/testnet.md: 1 addition, 1 deletion

- _resources/hivesigner_libs.md: 1 addition, 1 deletion

- _services/hivesigner.md: 2 additions, 2 deletions

- _tutorials-javascript/hivesigner.md: 1 addition, 1 deletion

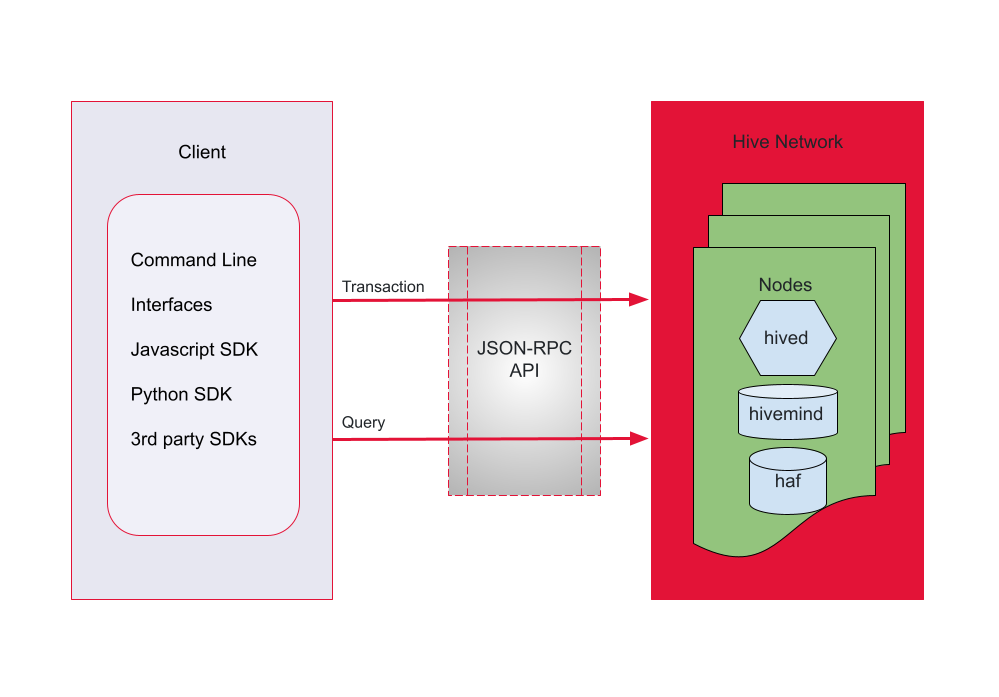

- images/hive-dev-structure.png: 0 additions, 0 deletions

_introduction/web3.md: new file, mode 0 → 100644

_introduction/workflow.md: new file, mode 0 → 100644

_quickstart/authentication.md: new file, mode 0 → 100644

_quickstart/fetch_broadcast.md: new file, mode 0 → 100644

images/hive-dev-structure.png: new file, mode 0 → 100644, 65.1 KiB